Chat in OpenRAG

After you upload documents to your knowledge base, you can use the OpenRAG Chat feature to interact with your knowledge through natural language queries.

The OpenRAG Chat uses an LLM-powered agent to understand your queries, retrieve relevant information from your knowledge base, and generate context-aware responses. The agent can also fetch information from URLs and new documents that you provide during the chat session. To limit the knowledge available to the agent, use filters.

The agent can call specialized Model Context Protocol (MCP) tools to extend its capabilities. To add or change the available tools, you must edit the OpenRAG OpenSearch Agent flow.

Try chatting, uploading documents, and modifying chat settings in the quickstart.

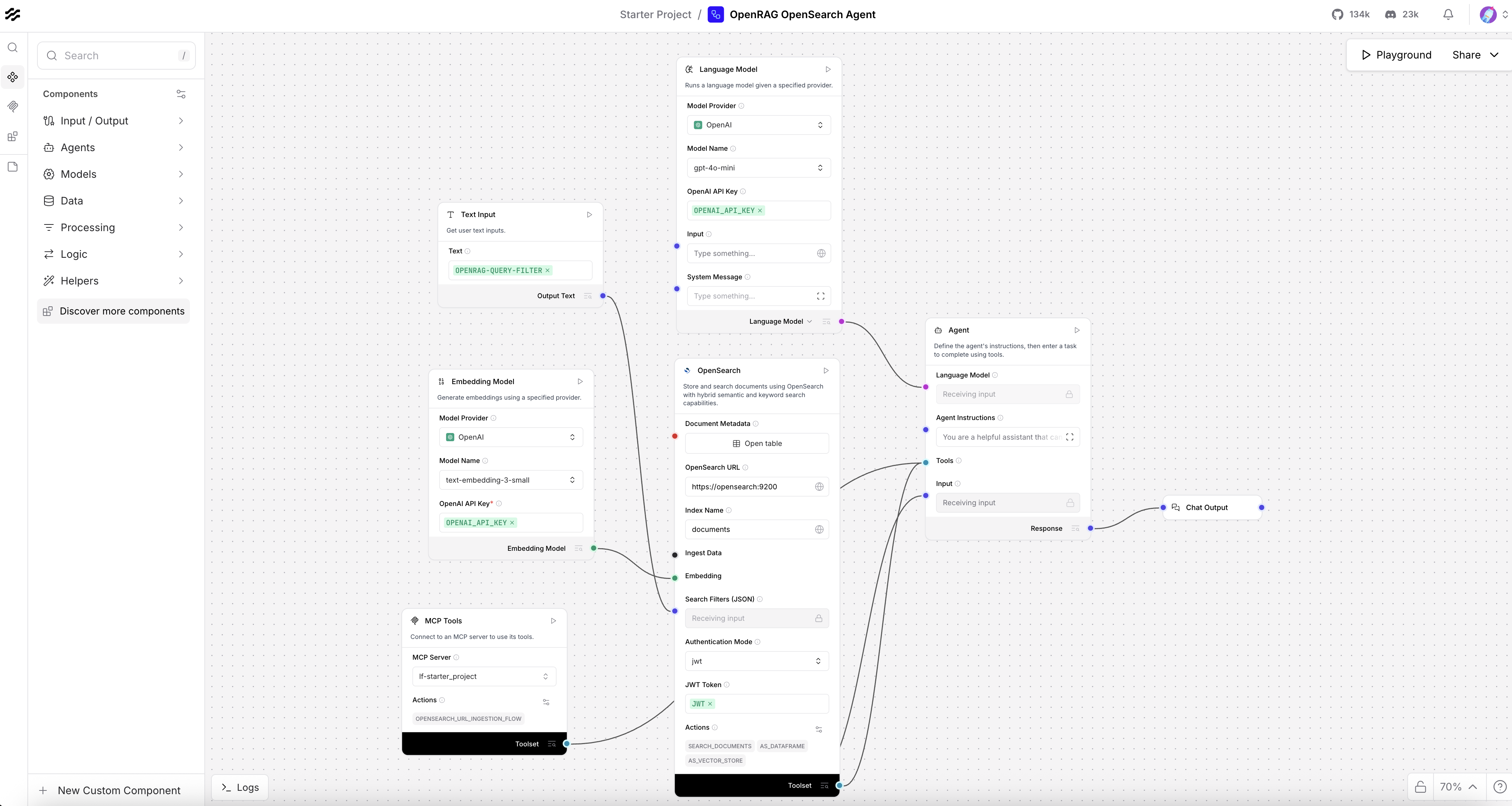

OpenRAG OpenSearch Agent flow

When you use the OpenRAG Chat, the OpenRAG OpenSearch Agent flow runs in the background to retrieve relevant information from your knowledge base and generate a response.

If you inspect the flow in Langflow, you'll see that it consists of eight components that work together to ingest chat messages, retrieve relevant information from your knowledge base, and then generate responses. When you inspect this flow, you can edit the components to customize the agent's behavior.

- Chat Input component: This component starts the flow when it receives a chat message. It is connected to the Agent component's Input port. When you use the OpenRAG Chat, your chat messages are passed to the Chat Input component, which then sends them to the Agent component for processing.

- Agent component: This component orchestrates the entire flow by processing chat messages, searching the knowledge base, and organizing the retrieved information into a cohesive response. The agent's general behavior is defined by the prompt in the Agent Instructions field and the model connected to the Language Model port. One or more specialized tools can be attached to the Tools port to extend the agent's capabilities. In this case, there are two tools: MCP Tools and OpenSearch.

The Agent component is the star of this flow because it powers decision making, tool calling, and an LLM-driven conversational experience.

How do agents work?

Agents extend Large Language Models (LLMs) by integrating tools, which are functions that provide additional context and enable autonomous task execution. These integrations make agents more specialized and powerful than standalone LLMs.

Whereas an LLM might generate acceptable, inert responses to general queries and tasks, an agent can leverage the integrated context and tools to provide more relevant responses and even take action. For example, you might create an agent that can access your company's documentation, repositories, and other resources to help your team with tasks that require knowledge of your specific products, customers, and code.

Agents use LLMs as a reasoning engine to process input, determine which actions to take to address the query, and then generate a response. The response could be a typical text-based LLM response, or it could involve an action, like editing a file, running a script, or calling an external API.

In an agentic context, tools are functions that the agent can run to perform tasks or access external resources. Each function is wrapped as a Tool object with a common interface that the agent understands. Agents become aware of tools through tool registration: the agent receives a list of available tools, typically when it is initialized. The Tool object's description tells the agent what the tool can do so that it can decide whether the tool is appropriate for a given request.

- Language Model component: Connected to the Agent component's Language Model port, this component provides the base language model driver for the agent. The agent cannot function without a model because the model is used for general knowledge, reasoning, and generating responses.

Different models can change the style and content of the agent's responses, and some models might be better suited for certain tasks than others. If the agent doesn't seem to be handling requests well, try changing the model to see how the responses change. For example, fast models might be good for simple queries, but they might not have the depth of reasoning for complex, multi-faceted queries.

- MCP Tools component: Connected to the Agent component's Tools port, this component can be used to access any MCP server and the MCP tools provided by that server. In this case, your OpenRAG Langflow instance's Starter Project is the MCP server, and the OpenSearch URL Ingestion flow is the MCP tool. This flow fetches content from URLs and then stores the content in your OpenRAG OpenSearch knowledge base. Because this flow is served as an MCP tool, the agent can call it selectively when it detects a URL in the chat input.

- OpenSearch component: Connected to the Agent component's Tools port, this component lets the agent search your OpenRAG OpenSearch knowledge base. The agent might not use this database for every request; it uses this connection only if it decides that documents in your knowledge base are relevant to your query.

- Embedding Model component: Connected to the OpenSearch component's Embedding port, this component generates embeddings from the chat input. These embeddings are used in a similarity search to find content in your knowledge base that is relevant to the chat input. The agent uses this information to generate context-aware responses that are specialized for your data.

It is critical that the embedding model used here matches the embedding model used when you upload documents to your knowledge base. Mismatched models and dimensions can degrade the quality of similarity search results, causing the agent to retrieve irrelevant documents from your knowledge base.

- Text Input component: Connected to the OpenSearch component's Search Filters port, this component is populated with a Langflow global variable named OPENRAG-QUERY-FILTER. If a global or chat-level knowledge filter is set, then the variable contains the filter expression, which limits the documents that the agent can access in the knowledge base. If no knowledge filter is set, then the OPENRAG-QUERY-FILTER variable is empty, and the agent can access all documents in the knowledge base.

- Chat Output component: Connected to the Agent component's Output port, this component returns the agent's generated response as a chat message.
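To make the division of labor among the Agent, OpenSearch, Embedding Model, and Text Input components more concrete, here is a toy, in-memory Python sketch of the retrieval path. It is not how Langflow or OpenSearch are implemented. The Tool and Chunk classes, the bag-of-words make_embedder function, and the filename-set filter are hypothetical stand-ins: a real Embedding Model returns dense vectors from a trained model, and a real knowledge filter is an OpenSearch filter expression. The tool name search_documents matches the function call name that appears in the chat. The sketch illustrates three ideas from the component descriptions above. First, a tool is a function wrapped with a description and registered with the agent by name. Second, queries and stored chunks must be embedded by the same model. Third, an empty filter leaves the whole knowledge base searchable.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Tool:
    """A function plus the description an agent reads when deciding whether to call it."""
    name: str
    description: str
    func: Callable[..., list[str]]


@dataclass
class Chunk:
    """One stored piece of a document, embedded at ingestion time."""
    text: str
    filename: str
    embedding: list[float]


def make_embedder(vocab: list[str]) -> Callable[[str], list[float]]:
    """Hypothetical stand-in for an embedding model: one dimension per vocabulary word."""
    def embed(text: str) -> list[float]:
        words = text.lower().split()
        return [float(words.count(term)) for term in vocab]
    return embed


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; refuses to compare vectors of different dimensions."""
    if len(a) != len(b):
        raise ValueError(f"embedding dimension mismatch: {len(a)} vs {len(b)}")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def make_search_tool(
    index: list[Chunk],
    embed: Callable[[str], list[float]],
    allowed_files: Optional[set[str]] = None,
) -> Tool:
    """Wrap a similarity search as a Tool. allowed_files=None acts like an empty knowledge filter."""
    def search_documents(query: str, k: int = 2) -> list[str]:
        query_vec = embed(query)  # must come from the same model used at ingestion
        visible = [c for c in index if allowed_files is None or c.filename in allowed_files]
        ranked = sorted(visible, key=lambda c: cosine(query_vec, c.embedding), reverse=True)
        return [c.text for c in ranked[:k]]

    return Tool(
        name="search_documents",
        description="Search the knowledge base for passages relevant to the query.",
        func=search_documents,
    )


# Ingestion: every chunk is embedded with the model that will later embed queries.
embed = make_embedder(["zero", "trust", "phishing", "attack"])
index = [
    Chunk(text, filename, embed(text))
    for text, filename in [
        ("zero trust means never trust and always verify", "security.pdf"),
        ("phishing is a common social engineering attack", "training.pdf"),
    ]
]

# Registration: the agent receives its tools, keyed by name, when it is initialized.
tools = {tool.name: tool for tool in [make_search_tool(index, embed)]}

# The agent (hard-coded here, an LLM in practice) decides to search and calls the tool by name.
print(tools["search_documents"].func("zero trust", k=1))
# ['zero trust means never trust and always verify']

# A filter limits which documents the tool can see.
filtered = make_search_tool(index, embed, allowed_files={"training.pdf"})
print(filtered.func("zero trust", k=1))
# ['phishing is a common social engineering attack']

# Embedding queries with a different "model" than the one used at ingestion.
try:
    make_search_tool(index, make_embedder(["zero", "trust"])).func("zero trust")
except ValueError as err:
    print(err)  # embedding dimension mismatch: 2 vs 4
```

The dimension check only catches the obvious case. Two different models that happen to produce vectors of the same length would pass it but still place queries and documents in unrelated vector spaces. This is why the embedding model itself must match, not just its dimension.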

Nudges

When you use the OpenRAG Chat, the OpenRAG OpenSearch Nudges flow runs in the background to pull additional context from your knowledge base and chat history.

Nudges appear as prompts in the chat, and they are based on the contents of your OpenRAG OpenSearch knowledge base. Click a nudge to accept it and start a chat based on the nudge.

As with OpenRAG's other built-in flows, you can inspect this flow in Langflow and customize it if you want to change the nudge behavior. However, this flow is specifically designed to work with the OpenRAG Chat and knowledge base, so major changes to it might break the nudge functionality or produce irrelevant nudges.

The Nudges flow consists of Embedding Model, Language Model, OpenSearch, Input/Output, and other components that browse your knowledge base, identify key themes and possible insights, and then produce prompts based on the findings.

For example, if your knowledge base contains documents about cybersecurity, possible nudges might include "Explain zero trust architecture principles" or "How to identify a social engineering attack".

Upload documents to the chat

When using the OpenRAG Chat, click Add in the chat input field to upload a file to the current chat session. Files added this way are processed and made available to the agent for the current conversation only. These files aren't stored in the knowledge base permanently.

Inspect tool calls and knowledge

During the chat, you'll see information about the agent's process. For more detail, you can inspect individual tool calls. This is helpful for troubleshooting because it shows you how the agent used particular tools. For example, click Function Call: search_documents (tool_call) to view the log of tool calls made by the agent to the OpenSearch component.

If documents in your knowledge base seem to be missing or interpreted incorrectly, see Troubleshoot document ingestion or similarity search issues.

If tool calls and knowledge appear normal, but the agent's responses seem off-topic or incorrect, consider changing the agent's language model or prompt, as explained in Inspect and modify flows.

Integrate OpenRAG chat into an application

You can integrate OpenRAG flows into your applications using the OpenRAG SDKs (recommended) or the Langflow API.

It is strongly recommended that you use the OpenRAG SDKs to interact with OpenRAG programmatically or integrate OpenRAG into an application. This is because OpenRAG passes specific configuration settings to the Langflow API when it runs a flow or conducts a chat session. For more information, see the following:

Use the Langflow API (Not recommended)

To trigger a flow with the Langflow API, you can get pre-configured code snippets directly from the embedded Langflow visual editor. However, these snippets don't include OpenRAG-specific configuration settings, so the behavior won't be identical to using OpenRAG directly.

The following example demonstrates how to generate and use code snippets for the OpenRAG OpenSearch Agent flow:

- Open the OpenRAG OpenSearch Agent flow in the Langflow visual editor:

  - In OpenRAG, click Settings.
  - Click Edit in Langflow, and then click Proceed to launch the Langflow visual editor.
  - If Langflow requests login information, enter the LANGFLOW_SUPERUSER and LANGFLOW_SUPERUSER_PASSWORD values from your OpenRAG .env file.

- Optional: If you don't want to use the Langflow API key that is generated automatically when you install OpenRAG, you can create a Langflow API key. This key doesn't grant access to OpenRAG; it is only for authenticating with the Langflow API.

  - In the Langflow visual editor, click your user icon in the header, and then select Settings.
  - Click Langflow API Keys, and then click Add New.
  - Name your key, and then click Create API Key.
  - Copy the API key and store it securely.
  - Exit the Langflow Settings page to return to the visual editor.

- Click Share, and then select API access to get pregenerated code snippets that call the Langflow API and run the flow.

  These code snippets construct API requests with your Langflow server URL (LANGFLOW_SERVER_ADDRESS), the flow to run (FLOW_ID), required headers (LANGFLOW_API_KEY, Content-Type), and a payload containing the required inputs to run the flow, including a default chat input message. In production, you would modify the inputs to suit your application logic. For example, you could replace the default chat input message with dynamic user input.

Python:

```python
import requests
import os
import uuid

api_key = 'LANGFLOW_API_KEY'
url = "http://LANGFLOW_SERVER_ADDRESS/api/v1/run/FLOW_ID"  # The complete API endpoint URL for this flow

# Request payload configuration
payload = {
    "output_type": "chat",
    "input_type": "chat",
    "input_value": "hello world!"
}
payload["session_id"] = str(uuid.uuid4())

headers = {"x-api-key": api_key}

try:
    # Send API request
    response = requests.request("POST", url, json=payload, headers=headers)
    response.raise_for_status()  # Raise exception for bad status codes

    # Print response
    print(response.text)

except requests.exceptions.RequestException as e:
    print(f"Error making API request: {e}")
except ValueError as e:
    print(f"Error parsing response: {e}")
```

TypeScript:

```typescript
const crypto = require('crypto');

const apiKey = 'LANGFLOW_API_KEY';

// session_id is part of the object literal so that TypeScript includes it in the payload's type
const payload = {
  "output_type": "chat",
  "input_type": "chat",
  "input_value": "hello world!",
  "session_id": crypto.randomUUID()
};

const options = {
  method: 'POST',
  headers: {
    'Content-Type': 'application/json',
    "x-api-key": apiKey
  },
  body: JSON.stringify(payload)
};

fetch('http://LANGFLOW_SERVER_ADDRESS/api/v1/run/FLOW_ID', options)
  .then(response => response.json())
  .then(response => console.log(response))
  .catch(err => console.error(err));
```

curl:

```bash
curl --request POST \
  --url 'http://LANGFLOW_SERVER_ADDRESS/api/v1/run/FLOW_ID?stream=false' \
  --header 'Content-Type: application/json' \
  --header "x-api-key: LANGFLOW_API_KEY" \
  --data '{
    "output_type": "chat",
    "input_type": "chat",
    "input_value": "hello world!"
  }'
```

- Copy your preferred snippet, and then run it:

  - Python: Paste the snippet into a .py file, save it, and then run it with python filename.py. The snippet requires the requests package.
  - TypeScript: Paste the snippet into a .ts file, save it, and then run it with ts-node filename.ts.
  - curl: Paste and run the snippet directly in your terminal.

If the request is successful, the response includes many details about the flow run, including the session ID, inputs, outputs, components, durations, and more.

In production, you won't pass the raw response to the user in its entirety. Instead, you extract and reformat relevant fields for different use cases, as demonstrated in the Langflow quickstart. For example, you could pass the chat output text to a front-end user-facing application, and store specific fields in logs and backend data stores for monitoring, chat history, or analytics. You could also pass the output from one flow as input to another flow.
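The generated snippets hard-code a default message and print the entire response. Here is a minimal sketch of the production pattern described above. It uses only the Python standard library (urllib.request) instead of requests. The LANGFLOW_SERVER_ADDRESS and FLOW_ID placeholders are kept from the generated snippets. Several parts are assumptions: the function names are hypothetical, the API key is read from a LANGFLOW_API_KEY environment variable, and the path used to extract the reply follows the one shown in the Langflow quickstart. The sketch also assumes that reusing one session_id across turns keeps those messages in the same conversation. Like the generated snippets, it bypasses OpenRAG's own configuration, so the OpenRAG SDKs remain the recommended route.

```python
import json
import os
import urllib.request
import uuid

# Placeholders kept from the generated snippets; replace them with your values.
LANGFLOW_URL = "http://LANGFLOW_SERVER_ADDRESS/api/v1/run/FLOW_ID"


def new_session_id() -> str:
    """One ID per conversation; reuse it for every turn of that conversation."""
    return str(uuid.uuid4())


def build_payload(message: str, session_id: str) -> dict:
    """Same fields as the generated snippets, with dynamic user input as the message."""
    return {
        "output_type": "chat",
        "input_type": "chat",
        "input_value": message,
        "session_id": session_id,
    }


def extract_chat_text(data: dict) -> str:
    """Return only the agent's reply from a run response.

    Assumption: the reply is nested as in the Langflow quickstart,
    data["outputs"][0]["outputs"][0]["results"]["message"]["text"].
    """
    return data["outputs"][0]["outputs"][0]["results"]["message"]["text"]


def ask(message: str, session_id: str) -> str:
    """POST one chat message to the flow and return only the reply text."""
    request = urllib.request.Request(
        LANGFLOW_URL,
        data=json.dumps(build_payload(message, session_id)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the key lives in an environment variable, not in the code.
            "x-api-key": os.environ.get("LANGFLOW_API_KEY", ""),
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_chat_text(json.load(response))
```

After you replace the placeholders, a front end could call new_session_id() once per conversation and then call ask(user_text, session_id) for each message, showing only the returned string to the user. If you also need the full response for logs or analytics, keep the parsed JSON before passing it to extract_chat_text.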